How Microservices Communicate Exploring Protocols for Integration

How Microservices Communicate: Exploring Protocols for Integration

Decoding the Language of Microservices: Navigating Communication Protocols for Seamless Integration

Introduction:

In the fast-paced world of modern software development, the microservices architecture has emerged as a powerful paradigm for building scalable, resilient, and maintainable systems. Central to the success of microservices is their ability to communicate effectively with one another, enabling seamless integration and collaboration within the system. At the heart of this communication lies a diverse array of protocols, each designed to address specific challenges and requirements of inter-service communication.

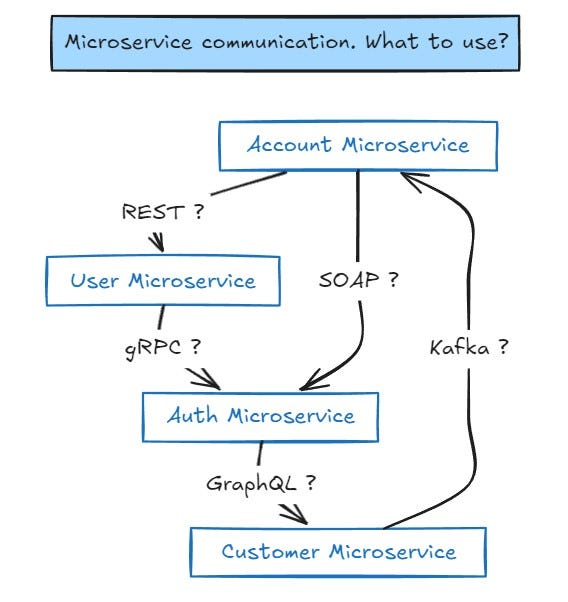

In this article, we embark on a journey to explore the intricate landscape of microservices communication protocols. We will delve into the fundamental concepts underlying these protocols, dissect their strengths and weaknesses, and provide insights into best practices for their selection and implementation. From RESTful APIs to messaging queues, from gRPC to GraphQL, we will uncover the rich tapestry of options available to developers and architects when designing microservices-based systems.

Whether you are a seasoned practitioner looking to deepen your understanding of microservices communication or a newcomer eager to navigate the complexities of modern software architecture, this article aims to equip you with the knowledge and insights needed to harness the full potential of microservices in your projects. Join us as we unravel the mysteries of microservices communication protocols and pave the way for smoother, more efficient interactions between your services.

When it comes to communication between microservices, several protocols play crucial roles, each with its own set of advantages and disadvantages. Let’s explore some of the main protocols along with their pros and cons:

RESTful APIs (Representational State Transfer):

RESTful APIs (Representational State Transfer) are a cornerstone of modern web development, offering a straightforward and widely adopted approach to building web services. At its core, RESTful architecture relies on a few key principles to design networked applications, including statelessness, uniform resource identification, and the use of standard HTTP methods.

Main Features:

Statelessness: One of the fundamental principles of REST is statelessness, meaning that each request from a client to the server must contain all the information necessary to understand and fulfill it. This design approach simplifies server implementation and enhances scalability, as servers do not need to maintain client state between requests.

Resource-Based: RESTful APIs are built around resources, which are identified by unique URLs. These resources represent entities in the system, such as users, articles, or products. Clients interact with these resources using standard HTTP methods like GET, POST, PUT, and DELETE, allowing for CRUD (Create, Read, Update, Delete) operations.

Uniform Interface: RESTful APIs follow a uniform interface, which simplifies communication between clients and servers. This interface is based on standard HTTP protocols, making it easy to understand and work with. Additionally, RESTful APIs often use hypermedia links to navigate between related resources, further enhancing discoverability and flexibility.

Example:

Consider an e-commerce application that exposes a RESTful API for managing products. Here’s how some of the main features might be implemented:

Resource URL: Each product in the system is represented by a unique URL, such as /products/{id}, where {id} is the identifier of the product.

HTTP Methods:

GET /products: Retrieves a list of all products.

GET /products/{id}: Retrieves details of a specific product.

POST /products: Creates a new product.

PUT /products/{id}: Updates an existing product.

DELETE /products/{id}: Deletes a product.

Resource Representation: Product information is typically represented in JSON or XML format in the request and response bodies, allowing for easy parsing and manipulation by clients and servers.

Benefits:

Simplicity: RESTful APIs are relatively simple to design and implement, making them accessible to developers of all levels of expertise.

Compatibility: RESTful APIs leverage standard HTTP protocols, ensuring compatibility with a wide range of platforms, frameworks, and tools.

Scalability: The stateless nature of RESTful APIs makes them inherently scalable, as servers can easily handle a large number of concurrent requests without maintaining client state.

Challenges:

Over-fetching/Under-fetching: Clients may receive more or less data than needed, leading to inefficient use of network resources.

Lack of standardized error handling: Error handling can vary between implementations, leading to inconsistencies in API behavior.

Overall, RESTful APIs provide a robust and flexible foundation for building web services, offering a balance of simplicity, scalability, and compatibility that makes them a popular choice for developers worldwide.

Pros:

Simplicity: RESTful APIs are easy to understand and implement, making them widely adopted.

Stateless: Each request from a client contains all the information needed to fulfill it, making the system stateless and scalable.

Compatibility: Works well with HTTP, which is a ubiquitous protocol supported by most platforms and frameworks.

Cons:

Over-fetching/Under-fetching: Clients might receive more or less data than needed, leading to inefficient use of network resources.

Lack of standardized error handling: Error handling can vary between implementations, leading to inconsistencies.

gRPC (Remote Procedure Call):

gRPC, which stands for Remote Procedure Call, is a high-performance and language-agnostic framework developed by Google. It enables efficient communication between distributed systems by allowing clients to invoke methods on remote servers as if they were local procedures. Unlike traditional web APIs, which often rely on textual formats like JSON or XML for data interchange, gRPC uses Protocol Buffers, a binary serialization format, to encode data, resulting in faster and more efficient communication.

Main Features:

Efficient Serialization: gRPC uses Protocol Buffers (protobuf) as its default serialization mechanism. Protocol Buffers are a binary format that offers efficient encoding and decoding of structured data, resulting in smaller message sizes and faster transmission over the network compared to textual formats like JSON or XML.

Bidirectional Streaming: gRPC supports bidirectional streaming, allowing both the client and server to send a stream of messages to each other asynchronously. This feature is particularly useful for use cases such as real-time communication, telemetry, and data processing pipelines.

Strongly Typed Interfaces: gRPC uses Protocol Buffers to define service interfaces and message types in a language-neutral way. These interface definitions are then compiled into code for various programming languages, providing strongly typed APIs and automatic serialization/deserialization of data.

Example:

Consider a distributed system for managing user authentication and authorization using gRPC. Here’s how some of the main features might be implemented:

Service Definition: Define gRPC services for user authentication and authorization, including methods like AuthenticateUser and AuthorizeAccess.

Protocol Buffers: Define message types for representing user credentials, authentication tokens, and authorization responses using Protocol Buffers.

Bidirectional Streaming: Implement bidirectional streaming for real-time communication between clients and servers, allowing servers to push updates or notifications to clients as needed.

Strong Typing: Generate client and server code from the Protocol Buffers definition, providing strongly typed APIs for interacting with the authentication and authorization services in multiple programming languages.

Benefits:

Performance: gRPC offers high-performance communication through efficient binary serialization and support for bidirectional streaming, making it well-suited for latency-sensitive and high-throughput applications.

Language Agnostic: gRPC supports multiple programming languages, allowing clients and servers to be implemented in different languages while still communicating seamlessly.

Automatic Code Generation: gRPC provides tools for automatic code generation from Protocol Buffers definitions, reducing the boilerplate code required to implement communication between services.

Challenges:

Complexity: Setting up gRPC and understanding its concepts, such as Protocol Buffers and bidirectional streaming, can be more complex compared to traditional web APIs like REST.

HTTP/2 Dependency: gRPC relies on HTTP/2 for communication, which may not be supported in all environments or by all clients and servers.

Overall, gRPC offers a powerful and efficient framework for building distributed systems, enabling developers to create fast, scalable, and language-agnostic services with ease. Its support for bidirectional streaming, strong typing, and automatic code generation make it particularly well-suited for modern microservices architectures and real-time communication scenarios.

Pros:

Performance: gRPC offers high-performance, bidirectional streaming, and efficient serialization using Protocol Buffers.

Strongly Typed: gRPC uses Protocol Buffers for data serialization, providing strong typing and automatic code generation in multiple languages.

Language agnostic: Supports multiple programming languages, enabling interoperability between different services.

Cons:

Complexity: Setting up gRPC can be more complex compared to REST due to its binary protocol and need for additional tooling.

HTTP/2 Dependency: Requires HTTP/2 support, which may not be available in all environments.

Message Queues (e.g., RabbitMQ, Apache Kafka):

Message Queues, exemplified by systems like RabbitMQ and Apache Kafka, are essential components in building distributed systems, particularly in scenarios where asynchronous communication and decoupling of services are paramount. They provide a robust infrastructure for passing messages between components of an application, ensuring reliable delivery, scalability, and fault tolerance.

Main Features:

Asynchronous Communication: Message queues facilitate asynchronous communication between services, allowing components to send and receive messages without needing to be online at the same time. This decoupling enables services to operate independently and asynchronously, improving system resilience and responsiveness.

Reliable Message Delivery: Message queues ensure reliable message delivery by storing messages persistently until they are successfully processed by the receiving component. This ensures that messages are not lost even in the event of failures or network issues.

Scalability: Message queues are designed to handle large volumes of messages efficiently, making them highly scalable. They typically support features such as message partitioning, load balancing, and clustering to distribute message processing across multiple nodes and scale horizontally as the system grows.

Example:

Consider an e-commerce application that uses RabbitMQ as its message queue for processing orders. Here’s how some of the main features might be implemented:

Order Processing: When a customer places an order on the website, the order details are sent to a RabbitMQ queue for processing.

Worker Consumers: Worker processes (consumers) connected to the RabbitMQ queue continuously monitor for new orders. When an order is received, a worker processes it by updating inventory, calculating shipping costs, and sending confirmation emails.

Message Durability: RabbitMQ ensures message durability by persistently storing messages on disk, even in the event of a server crash or restart, to guarantee reliable delivery.

Scaling: As the application’s order volume increases, additional worker processes can be added to distribute the workload across multiple nodes and scale horizontally.

Benefits:

Decoupling of Services: Message queues decouple producers and consumers of messages, allowing services to operate independently and asynchronously, leading to more resilient and scalable architectures.

Reliable Communication: Message queues ensure reliable delivery of messages, even in the face of failures or network issues, by persistently storing messages until they are successfully processed.

Scalability: Message queues are highly scalable and can handle large volumes of messages efficiently, making them suitable for high-throughput applications and distributed systems.

Challenges:

Complexity: Setting up and managing message queues can add complexity to the system architecture, requiring careful consideration of message routing, queue configuration, and error handling.

Message Ordering: Maintaining strict message ordering can be challenging in distributed systems, especially when messages are processed concurrently by multiple consumers.

Single Point of Failure: The message queue itself can become a single point of failure in the system. To mitigate this risk, message queues often support features like replication, clustering, and high availability configurations.

Overall, message queues play a critical role in modern distributed systems, providing a reliable and scalable infrastructure for asynchronous communication between services. Whether it’s processing orders in an e-commerce application or handling real-time data streams in a big data pipeline, message queues offer a flexible and robust solution for building resilient and scalable architectures.

Pros:

Asynchronous Communication: Enables decoupling of services and supports asynchronous communication, improving system resilience and scalability.

Guaranteed Delivery: Provides mechanisms for reliable message delivery, ensuring messages are not lost.

Load Balancing: Can distribute messages across multiple consumers for load balancing.

Cons:

Complexity: Setting up and managing message queues can add complexity to the system architecture.

Message Ordering: Maintaining strict message ordering can be challenging in distributed systems.

Message Broker as a Single Point of Failure: If the message broker fails, it can disrupt communication between services.

GraphQL:

GraphQL is a query language for APIs that enables clients to request only the data they need, allowing for more efficient and flexible communication between clients and servers. Unlike traditional RESTful APIs, which expose a fixed set of endpoints that return predefined data structures, GraphQL allows clients to specify the shape and structure of the data they require, empowering them to retrieve exactly what they need in a single request.

Main Features:

Flexible Querying: GraphQL enables clients to request precisely the data they need, reducing over-fetching and under-fetching of data compared to traditional RESTful APIs. Clients can specify the fields they want to retrieve, as well as any relationships or nested data structures they require.

Strong Typing: GraphQL uses a type system to define the structure of the data exposed by the API. This enables clients to discover and understand the available data and operations through introspection, leading to better tooling, documentation, and type safety.

Real-time Updates: GraphQL supports real-time updates through subscriptions, allowing clients to subscribe to changes in the data and receive updates in real-time as they occur. This feature is particularly useful for applications requiring live data updates, such as chat applications or real-time analytics dashboards.

Example:

Consider a social media application that uses GraphQL to fetch user profiles and posts. Here’s how some of the main features might be implemented:

Querying User Profiles: Clients can send a GraphQL query specifying the fields they want to retrieve from a user profile, such as their name, bio, and profile picture. The server responds with only the requested fields, optimizing data transfer and reducing network overhead.

Fetching Posts: Clients can query for a user’s posts along with associated data, such as the post content, author, and comments. By specifying the desired fields and relationships in the query, clients can retrieve all the necessary data in a single request, avoiding multiple round trips to the server.

Real-time Updates: Clients can subscribe to changes in the user’s posts using GraphQL subscriptions. When a new post is created or an existing post is updated, the server sends a real-time update to subscribed clients, ensuring they always have the latest information.

Benefits:

Efficient Data Retrieval: GraphQL enables clients to request only the data they need, reducing over-fetching and under-fetching of data compared to traditional RESTful APIs.

Strong Typing and Introspection: GraphQL’s type system and introspection capabilities provide a clear and self-documenting API, leading to better tooling, documentation, and type safety.

Real-time Updates: GraphQL supports real-time updates through subscriptions, allowing clients to receive live updates as data changes occur on the server.

Challenges:

Learning Curve: Adopting GraphQL requires learning a new query language and understanding its concepts, which can be challenging for developers accustomed to RESTful APIs.

Complexity: Implementing a GraphQL server can be more complex compared to traditional RESTful APIs, especially for complex schemas and operations.

Caching and Performance: Caching and performance optimization strategies may differ from those used with RESTful APIs due to the dynamic nature of GraphQL queries and the potential for over-fetching of data.

Overall, GraphQL offers a powerful and flexible approach to building APIs, enabling efficient communication between clients and servers while providing real-time updates and strong typing. Whether it’s fetching user profiles in a social media application or querying complex data structures in a large-scale system, GraphQL empowers developers to build more efficient and flexible APIs that better meet the needs of modern applications.

Pros:

Flexible Querying: Allows clients to request only the data they need, reducing over-fetching and under-fetching.

Strong Typing: Provides a strongly typed schema, enabling better tooling and documentation.

Versioning: Supports schema versioning, allowing gradual changes without breaking existing clients.

Cons:

Learning Curve: Requires understanding of the GraphQL query language and schema definition.

Increased Complexity: Implementing a GraphQL server can be more complex compared to REST, especially for complex schemas and operations.

Each protocol offers unique benefits and challenges, and the choice depends on factors such as performance requirements, development team expertise, and specific project needs. By carefully considering these factors, developers can select the most suitable communication protocol for their microservices architecture, ensuring efficient and robust communication between services.

Conclusion

In the realm of microservices architecture, selecting the right communication protocol is a critical decision that can profoundly impact the efficiency, scalability, and maintainability of the system. Whether it’s RESTful APIs, gRPC, GraphQL, or message queues like RabbitMQ or Apache Kafka, each protocol brings its own set of strengths and considerations to the table.

In concluding the selection process, it’s essential to consider several key factors:

Functional Requirements: Assess the specific needs of your application, including data transfer patterns, real-time requirements, and support for bidirectional communication. Choose a protocol that aligns closely with these requirements to ensure optimal performance and functionality.

Performance: Evaluate the performance characteristics of each protocol, including factors such as message size, serialization overhead, and network latency. Consider the scalability requirements of your system and select a protocol that can efficiently handle the expected workload.

Developer Experience: Consider the familiarity and expertise of your development team with each protocol. Choose a protocol that aligns with your team’s skills and preferences to facilitate rapid development and minimize the learning curve.

Interoperability: Assess the interoperability of each protocol with existing systems and technologies in your ecosystem. Choose a protocol that seamlessly integrates with other services, libraries, and frameworks to avoid compatibility issues and simplify integration efforts.

Security: Evaluate the security features and capabilities of each protocol, including support for encryption, authentication, and access control. Choose a protocol that provides robust security mechanisms to protect sensitive data and prevent unauthorized access.

Community Support and Ecosystem: Consider the availability of libraries, tools, and documentation for each protocol within the developer community. Choose a protocol with a vibrant ecosystem and strong community support to leverage existing resources and accelerate development efforts.

By carefully considering these factors and weighing the trade-offs between different protocols, you can make an informed decision that aligns with the specific needs and objectives of your microservices architecture. Remember that there is no one-size-fits-all solution, and the optimal protocol may vary depending on the unique requirements and constraints of your project. With thoughtful evaluation and strategic planning, you can select a microservices communication protocol that sets your system up for success in the long run.